Autonomous Vehicles: the tech unicorn we all love to debate. But what are they, really, and how do they manage to get from Point A to Point B without any help from a human driver? Let’s break this down in simple terms—after all, it’s not exactly magic, though it might seem that way at times.

A self-driving car, also known as an autonomous vehicle, uses a combination of sensors, such as cameras and radar, and artificial intelligence to travel between destinations without a human needing to touch the wheel. The car’s artificial intelligence (AI) makes decisions in real time, continuously analyzing its surroundings to respond to conditions like traffic lights, road signs, or unpredictable pedestrians darting out into the street. The role of AI in autonomous navigation is crucial, transforming what might otherwise be just a dumb box on wheels into a highly aware driver.

What makes a car self-driving? It’s all about the system: cameras for vision, LiDAR (light detection and ranging) for 3D mapping, radar for measuring distance and speed, and Vehicle-to-Everything (V2X) communication to talk to other smart devices, from traffic signals to other cars. These components, meshed together with powerful processors and data-crunching algorithms, allow autonomous cars to build a 3D model of the world around them and continuously plan a path through it.

The Technology Behind Driverless Vehicles

So, how does the whole tech cocktail behind driverless vehicles work? At its core, a self-driving car relies on an intricate interplay of cameras, LiDAR, radar, and the car’s AI brain. Let’s explore this in more depth.

Overview Of Sensors: LiDAR, Radar, And Cameras

LiDAR acts as the vehicle’s depth perception. It fires pulses of laser light and times how long each one takes to bounce back, which yields a 3D map of the surroundings. This data tells the car what’s around it, like buildings, pedestrians, or other vehicles, and how far away each one is. Think of it as a constantly updating virtual reality map of the environment.
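To make the laser-ranging idea concrete, here is a minimal sketch of how a single LiDAR return could be turned into a 3D point. The function names and the angle convention (azimuth measured in the horizontal plane, elevation measured up from it) are assumptions for illustration, not any particular sensor’s API.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def lidar_range_m(round_trip_s: float) -> float:
    """Distance to the target from a pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half the
    total path length.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one return (range plus beam angles) to x, y, z in meters.

    Assumed convention: x points forward, y to the left, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


# A pulse that returns after 200 nanoseconds hit something about 30 m away.
print(round(lidar_range_m(200e-9), 2))  # 29.98
```

Repeat that for hundreds of thousands of pulses per second, each fired at a slightly different angle, and you get the point cloud that the 3D map is built from.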

Radar complements LiDAR by measuring the distance and speed of moving objects, and it is generally less affected by rain or fog than optical sensors. For example, radar can help spot another car approaching fast, which might be less clear with cameras alone. Cameras, meanwhile, are the most visually intuitive: they provide the data that helps the car read road signs, follow lane markings, and recognize a person crossing the road. Together, these sensors form a comprehensive view of the world.
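How does radar know a car is approaching fast? One common approach, sketched here as an assumption rather than a description of any specific product, is the Doppler effect: the reflected signal comes back at a slightly shifted frequency, and the size of the shift gives the closing speed. The function name is hypothetical, and the 77 GHz default is a typical automotive radar band.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def radial_speed_m_per_s(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Speed along the line of sight from the measured Doppler shift.

    The factor of 2 appears because the wave is shifted once on the way
    to the target and again on the way back. A positive result means
    the object is getting closer.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_M_PER_S / (2.0 * carrier_hz)


# A shift of roughly 15.4 kHz at 77 GHz corresponds to about 30 m/s (108 km/h).
print(round(radial_speed_m_per_s(15_410.0), 1))
```

Note that this only gives speed toward or away from the sensor; sideways motion has to be inferred from how the object’s position changes over successive measurements.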

How Artificial Intelligence Processes Driving Data

Once all the data is collected, artificial intelligence steps in to make sense of it. First, AI processes the information from LiDAR, radar, and cameras to create a coherent image of the surroundings. This real-time image helps the car understand its environment, including objects, road markings, and pedestrians.

After creating the image, the AI uses advanced algorithms to predict the behavior of nearby objects. For instance, it anticipates whether a pedestrian might step into the road or if another vehicle is changing lanes. These predictions need to be fast and accurate, allowing the car to react instantly to changes.

Finally, based on the predictions, the AI makes decisions, sometimes in a fraction of a second. It decides when to accelerate, brake, or steer, and all of this happens without human intervention. It’s like your brain driving, but with faster reflexes and a way better memory for traffic rules.
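The perceive, predict, decide loop can be sketched in a few lines. This is a deliberately tiny toy, not how a production system works: it assumes a single object directly ahead, predicts its motion with a constant-velocity model, and picks an action from the time to collision. The class, function names, and thresholds are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class TrackedObject:
    gap_m: float                  # distance ahead of our car along the lane
    closing_speed_m_per_s: float  # positive when the gap is shrinking


def predict_gap_m(obj: TrackedObject, horizon_s: float) -> float:
    """Predict the gap after horizon_s seconds, assuming constant speeds."""
    return obj.gap_m - obj.closing_speed_m_per_s * horizon_s


def time_to_collision_s(obj: TrackedObject) -> float:
    """Seconds until the gap reaches zero, or infinity if it is not shrinking."""
    if obj.closing_speed_m_per_s <= 0:
        return float("inf")
    return obj.gap_m / obj.closing_speed_m_per_s


def decide(obj: TrackedObject, brake_below_s: float = 2.0,
           ease_off_below_s: float = 4.0) -> str:
    """Choose an action from the predicted time to collision."""
    ttc = time_to_collision_s(obj)
    if ttc < brake_below_s:
        return "brake"
    if ttc < ease_off_below_s:
        return "ease_off"
    return "maintain"


print(decide(TrackedObject(gap_m=10.0, closing_speed_m_per_s=10.0)))  # brake
print(decide(TrackedObject(gap_m=30.0, closing_speed_m_per_s=10.0)))  # ease_off
print(decide(TrackedObject(gap_m=30.0, closing_speed_m_per_s=-1.0)))  # maintain
```

A real car runs a loop like this many times per second over every tracked object, with far richer prediction models, but the shape of the problem is the same.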

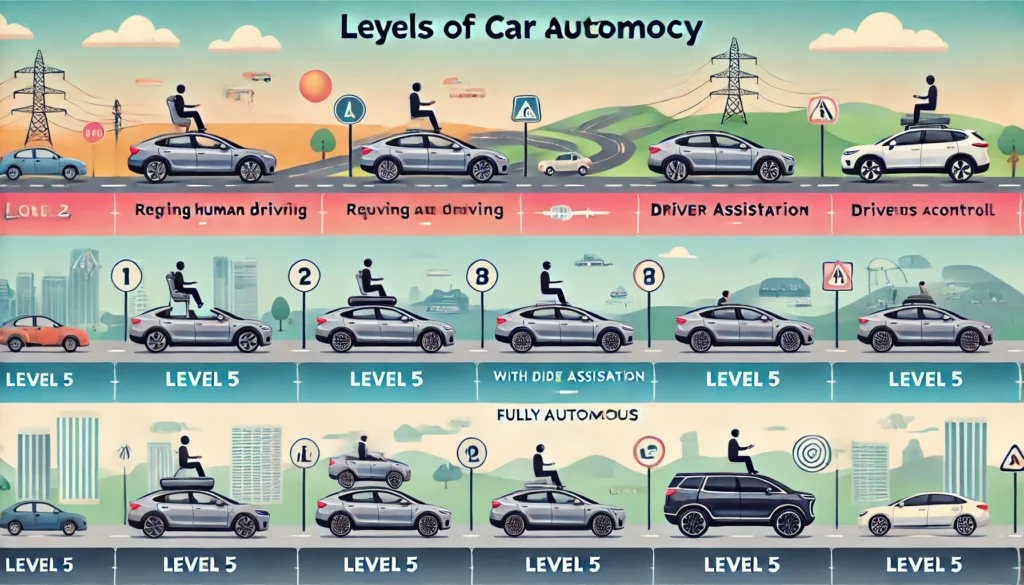

Understanding The Different Levels Of Car Autonomy

If you’ve been following the self-driving hype, you might’ve heard the term “levels of autonomy.” This concept is important, and it’s not just industry jargon—it actually helps define what a self-driving car can and cannot do.

Levels 0 To 5 Explained: From Manual To Fully Autonomous

The levels range from 0 to 5. Level 0 is your good old-fashioned manual car, where you do all the work. Levels 1 and 2 add features like adaptive cruise control or lane-keeping assistance, meaning the car can help but you’re still the boss and must watch the road at all times. At Level 3, the car drives itself under limited conditions, but you have to be ready to take over when it asks. At Level 4, things get exciting: the car can drive itself under specific conditions, such as within a mapped service area, without needing a human to step in; outside those conditions, it won’t operate autonomously. Level 5 is the holy grail: a fully autonomous vehicle that can handle all aspects of driving, in all environments, without any need for human backup.
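If it helps to see the levels as data, here is a small sketch that follows the SAE J3016 naming. The helper functions are hypothetical; they just encode who is responsible for what at each level.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_must_monitor(level: AutonomyLevel) -> bool:
    """Levels 0 to 2: the human watches the road the whole time."""
    return level <= AutonomyLevel.PARTIAL_AUTOMATION


def human_is_fallback(level: AutonomyLevel) -> bool:
    """Levels 0 to 3: a human must be able to take over when needed."""
    return level <= AutonomyLevel.CONDITIONAL_AUTOMATION


def works_in_all_conditions(level: AutonomyLevel) -> bool:
    """Only Level 5 is unrestricted; Level 4 is limited to specific conditions."""
    return level == AutonomyLevel.FULL_AUTOMATION


for level in AutonomyLevel:
    print(level.value, level.name, human_must_monitor(level), human_is_fallback(level))
```

The big dividing line sits between Levels 2 and 3: below it, the human is driving with help; above it, the car is driving, at least some of the time.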

Current Status Of Level 5 Autonomy

Spoiler alert: we’re not quite there yet. Level 5 autonomy is still in the experimental phase. Real-world complexities remain challenging, such as unpredictable weather, poorly marked roads, and human drivers.

One major issue is dealing with unexpected weather changes. Heavy rain or snow can obscure sensors, reducing the car’s ability to navigate effectively. Moreover, many roads are poorly marked, which complicates the AI’s task of detecting lanes and making decisions.

Lastly, human drivers add another layer of unpredictability. Unlike machines, human behavior on the road can be erratic and often doesn’t follow the rules. These challenges are significant, and overcoming them is key to reaching full autonomy.

Key Components Of Autonomous Car Systems

To really understand self-driving cars, it helps to zoom in on the key components that make them tick. A car is just a big hunk of metal without its tech.

Sensors And Their Role In Environment Detection

The sensors are critical for understanding the car’s environment. LiDAR, cameras, and radar work together to gather crucial information. These sensors allow the car to “see” its surroundings, creating an accurate image of the world.

LiDAR is essential for mapping the car’s environment. It helps in detecting obstacles, like buildings or pedestrians, by using laser light. Meanwhile, radar plays a different role by measuring distances and detecting the speed of moving objects, which is vital for safe maneuvering.

Additional sensors provide other critical data, like road surface conditions and the vehicle’s own status. For example, these sensors help monitor tire pressure or road bumps, allowing the car to adjust accordingly. Together, this network of sensors enables a well-rounded, comprehensive understanding of the car’s environment, ensuring a safer and more efficient driving experience.
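One simple way to picture sensors working together is to combine their distance readings, trusting the more precise sensor more. The sketch below uses inverse-variance weighting, a standard textbook technique chosen here for illustration; real vehicles typically use more elaborate filters. The function name and the example numbers are assumptions.

```python
def fuse(estimates):
    """Combine (value, variance) pairs into one estimate.

    Each reading is weighted by 1 / variance, so a noisy sensor
    contributes less. Returns the fused value and its variance, which
    is smaller than that of any single input.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical readings of the distance to the car ahead, in meters:
lidar = (20.0, 0.01)  # precise
radar = (21.0, 0.25)  # noisier
distance_m, variance = fuse([lidar, radar])
print(round(distance_m, 2), round(variance, 4))
```

In this example the fused distance lands close to the LiDAR reading, since LiDAR was given the smaller variance, while the radar reading still nudges it slightly.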

Vehicle-To-Everything (V2X) Communication And Data Sharing

Another crucial component is V2X communication. This involves the car sharing information with external systems—think of it as the car talking to traffic signals, road sensors, or even other vehicles. By communicating with external infrastructure, the car gains a better understanding of road conditions and potential hazards.

V2X communication allows for proactive responses to changes in traffic conditions. For instance, if a traffic light is about to turn red, the vehicle can adjust its speed accordingly. This real-time data sharing can lead to more efficient driving behaviors and reduce sudden braking or acceleration.
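The traffic light example can be sketched like this. The message shape is hypothetical and is not a real V2X message format; the point is only to show how knowing the time until red lets the car choose between carrying on and slowing smoothly instead of braking hard at the last moment.

```python
from dataclasses import dataclass


@dataclass
class SignalMessage:
    # Hypothetical fields, for illustration only.
    distance_to_stop_line_m: float
    seconds_until_red: float


def plan_for_signal(msg: SignalMessage, speed_m_per_s: float, margin_s: float = 1.0):
    """Return (action, deceleration in m/s^2) for an upcoming light.

    If the car would cross the stop line with margin_s to spare, it
    keeps its speed. Otherwise it slows at the constant rate that brings
    it to rest exactly at the line: a = v^2 / (2 * d).
    """
    if speed_m_per_s <= 0 or msg.distance_to_stop_line_m <= 0:
        return ("maintain", 0.0)
    time_to_line_s = msg.distance_to_stop_line_m / speed_m_per_s
    if time_to_line_s + margin_s <= msg.seconds_until_red:
        return ("maintain", 0.0)
    decel = speed_m_per_s ** 2 / (2.0 * msg.distance_to_stop_line_m)
    return ("slow", decel)


print(plan_for_signal(SignalMessage(100.0, 10.0), speed_m_per_s=15.0))  # ('maintain', 0.0)
print(plan_for_signal(SignalMessage(100.0, 5.0), speed_m_per_s=15.0))   # ('slow', 1.125)
```

A deceleration of about 1 m/s² feels like gently lifting off the accelerator, which is exactly the kind of smooth behavior early warning makes possible.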

This level of connectivity also improves overall traffic management. Cars equipped with V2X can coordinate with each other, reducing congestion and enabling smoother routing. As more vehicles adopt this technology, we could see a significant reduction in accidents and an improvement in road safety.

Benefits Of Autonomous Vehicles: Safety, Efficiency, And More

The biggest selling point for self-driving cars is safety. Human error is commonly cited as a factor in roughly 90% of traffic accidents, and let’s face it, we’re all guilty of checking our phones at a red light. Autonomous vehicles don’t get distracted, drowsy, or tipsy, so they could reduce accidents significantly.

Increased Efficiency And Reduced Traffic

Self-driving cars also promise more efficient traffic management. Imagine fewer traffic jams because cars communicate and coordinate movements seamlessly. When vehicles share data, they can adjust speeds and routes to avoid congestion.

This interconnectedness leads to fewer sudden stops and starts. Cars can optimize their movements, resulting in smoother driving patterns across the board. With reduced stop-and-go driving, fuel consumption decreases, which means lower emissions. No more unnecessary revving or brake-slamming.

Moreover, autonomous vehicles can improve traffic flow by predicting and reacting to traffic conditions almost instantly. By optimizing routes and reducing human error, these cars help minimize delays. Smoother traffic flow not only reduces travel time but also makes journeys more predictable, and the resulting drop in fuel use could mean less pollution.

Current Development Status And Companies Leading The Way

Leading Companies Like Tesla, Waymo, And Cruise

There’s fierce competition among tech giants and automakers to be the first to master driverless technology. Tesla’s Autopilot and Full Self-Driving (FSD) Beta get a lot of media buzz, although both still require an attentive driver behind the wheel. Meanwhile, Waymo, an Alphabet subsidiary, and Cruise, backed by GM, are also significant players.

These companies are at the forefront of innovation, conducting trials to refine their autonomous systems. For example, Waymo has rolled out autonomous taxis in Phoenix, while Cruise has started testing in San Francisco. Their efforts illustrate that the dream of fully driverless cars is becoming more tangible every day.

Moreover, these trials aren’t just publicity stunts. They are critical for understanding real-world challenges. By operating in select cities, these companies are collecting valuable data to improve safety and performance. This suggests that the self-driving dream is inching closer to reality and isn’t just sci-fi anymore.

Countries At The Forefront Of Autonomous Vehicle Testing

The United States, China, and parts of Europe are leading in self-driving car testing. Cities like Phoenix, Arizona, and Shenzhen, China, are allowing autonomous taxis to roam their streets. These cities serve as testing grounds, providing valuable insights into real-world conditions.

Regulatory support is a major factor in making this possible. Governments are experimenting with ways to incorporate autonomous vehicles into existing transportation frameworks. This support includes creating policies, safety standards, and even infrastructure to facilitate testing and deployment.

Moreover, cities participating in these tests are also setting precedents for others. They demonstrate how urban areas can adapt to integrate new technology, balancing safety with innovation. By tackling regulatory challenges early, these leading regions pave the way for more widespread adoption of autonomous vehicles in the future.

Challenges And Limitations Of Driverless Technology

Technical Challenges: Complex Environments And Sensor Limitations

It’s not all smooth roads. Complex urban environments still pose significant challenges for driverless technology. A driverless car can easily handle a well-organized suburban street, where conditions are predictable.

However, downtown intersections are a completely different scenario. These areas have constant activity, with cars, cyclists, and pedestrians moving unpredictably. During rush hour, this complexity becomes even more pronounced, making it difficult for autonomous systems to anticipate and react effectively.

Furthermore, urban environments have various obstacles that can confuse sensors, like temporary road closures or construction. Dealing with these dynamic elements requires highly sophisticated algorithms. Although driverless technology is improving, these chaotic urban settings highlight its current limitations. The challenges of navigating through crowded, unpredictable intersections remain a major barrier to achieving reliable, fully autonomous driving in every setting.

Public Perception And Trust Issues

Another challenge is public trust. If people don’t feel safe riding in a self-driving car, the technology will struggle to gain widespread acceptance. Trust is crucial, and without it, adoption rates will remain low.

Incidents where autonomous systems have failed only add to the skepticism. News reports about accidents involving self-driving cars often make headlines, leading to fear and uncertainty. These incidents, while relatively rare, shape public perception negatively.

To build trust, companies need to prove that autonomous vehicles are genuinely safer. This involves transparency in reporting test results, addressing failures openly, and continuously improving safety features. Effective communication, combined with successful trials, can help bridge the trust gap. Until public trust improves, the adoption of self-driving cars will continue to face significant hurdles.

Legal And Ethical Considerations For Autonomous Vehicles

Who Is Liable In Case Of An Accident?

This is one of the most complex questions about autonomous vehicles. If a self-driving car causes an accident, who is liable? Is it the manufacturer, the software developer, or even the passenger who was supposed to supervise?

Currently, laws are struggling to keep pace with technological advancements. Most regulations were written for traditional vehicles, where liability is usually clear-cut. Now, with autonomous systems involved, responsibility becomes more diffuse, which makes determining accountability challenging.

Moreover, without clear guidelines, insurance companies face difficulties in underwriting policies for autonomous vehicles. This legal ambiguity creates hesitation among manufacturers, developers, and potential users. Until lawmakers establish clear rules, liability issues will remain a significant barrier to widespread adoption of autonomous driving technology.

Ethical Dilemmas In Programming Decision-Making Algorithms

Beyond the legal, there are significant ethical issues. For instance, how does a car decide who to protect in an unavoidable crash scenario—the passenger, the pedestrian, or other road users? This decision-making process becomes even more challenging because it involves moral choices that have no perfect answers.

These ethical dilemmas are tricky to code because every culture may prioritize values differently. For example, some might argue that protecting the passenger should be paramount, while others might prioritize minimizing overall harm. This moral complexity makes programming such algorithms extremely difficult.

Moreover, the lack of consensus on ethical priorities is a major roadblock for developers. Until there is widespread agreement on how autonomous vehicles should handle these situations, it remains one more reason we don’t see Level 5 cars cruising through town.

The Future of Autonomous Vehicles: What’s Next?

Self-driving technology is advancing, but we still have quite a journey ahead before autonomous vehicles become a part of daily life for everyone. Expect to see advancements in artificial intelligence, improved infrastructure, and possibly new regulatory frameworks that support broader adoption. And while we may not see fully driverless Level 5 cars on every street corner tomorrow, the day when autonomous vehicles become a normal part of urban mobility is coming.

The reality is that self-driving cars hold the potential to revolutionize the way we move, cut down on accidents, and make transportation accessible for people who are unable to drive. But just like with any tech breakthrough, we’ve got some kinks to iron out. Until then, keep your eyes on the road, and maybe let your car do the same—just not without you behind the wheel (for now).